How AI Is Programmed: A Practical Guide for Developers

Explore the step-by-step process of how AI is programmed, from data collection and model design to training, testing, and deployment, with practical tips for developers and researchers.

How AI is programmed refers to the process of designing and implementing data pipelines, models, and learning rules that enable artificial intelligence systems to perform tasks.

Overview of AI Programming

AI programming is the process of designing and implementing algorithms, data pipelines, and learning rules that enable machines to perform tasks that typically require human intelligence. At its core, it begins with a problem statement, a measurable objective, and access to data that can guide the model toward the desired behavior. If you are exploring how AI is programmed, think of it as a loop: collect data, choose a learning approach, train a model, evaluate results, adjust parameters, and deploy. The quality of the data, the clarity of the objective, and the choice of learning paradigm all shape the final performance. In practice, teams balance accuracy, efficiency, and safety constraints while staying aligned with user needs and ethical considerations. This section lays the foundation for the rest of the guide.

Core Concepts in AI Programming

The process rests on three core pillars: data, models, and objectives. Data provides the material that models learn from, while objectives define what success looks like. Models are the mathematical representations that transform inputs into outputs. In practice, developers choose learning paradigms such as supervised, unsupervised, or reinforcement learning depending on the task. A clear understanding of loss functions, optimization, and regularization helps tune models toward robust performance. Throughout, maintain alignment with user needs and safety constraints.
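To make loss functions, optimization, and regularization concrete, here is a minimal sketch that fits a one-feature linear model by gradient descent on mean squared error with an optional L2 penalty. The function name, learning rate, and toy data are illustrative assumptions, not part of any particular library.

```python
# Minimal sketch: fit y ~ w * x + b by gradient descent.
# Loss = mean squared error + l2 * w**2 (the L2 regularization term).
# All names and hyperparameters are illustrative assumptions.

def train_linear(xs, ys, lr=0.05, l2=0.0, epochs=2000):
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # The L2 penalty contributes 2 * l2 * w to the weight gradient.
        grad_w += 2 * l2 * w
        # Optimization step: move parameters against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data generated from y = 2x + 1

w, b = train_linear(xs, ys)                  # converges close to w = 2, b = 1
w_reg, b_reg = train_linear(xs, ys, l2=0.5)  # penalty shrinks w toward zero
```

On this toy data the unregularized fit recovers the generating line, while the regularized fit trades some accuracy for a smaller weight, which is the tradeoff regularization is meant to expose.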

Data and Data Quality

AI programming starts with data, and data quality often determines success more than the choice of algorithm. This section explains where data comes from, how it is prepared, and why labeling, cleaning, and balancing matter. Real-world data is messy, biased, and incomplete, so teams invest in data collection strategies, versioning, and provenance tracking. Before training, practitioners define data schemas, split data into training and validation sets, and apply data augmentation only after the split so that augmented copies of one example cannot leak across sets. Emphasize privacy, compliance, and ethical handling of sensitive information. When data quality improves, models learn more meaningful patterns and generalize better to unseen situations. The goal is to create datasets that reflect real user needs while remaining fair, inclusive, and representative across contexts.
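One common way to keep splits leak-free is to assign each record to a split deterministically from a stable identifier, so duplicates and later re-runs always land on the same side. The sketch below assumes every record is a dict with a hypothetical "id" field; the function names and the 20% validation fraction are illustrative.

```python
import hashlib


def assign_split(record_id, validation_fraction=0.2):
    """Deterministically map a record ID to 'train' or 'validation'."""
    digest = hashlib.sha256(record_id.encode("utf-8")).hexdigest()
    # Interpret the first 32 bits of the hash as a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "validation" if bucket < validation_fraction else "train"


def split_records(records, validation_fraction=0.2):
    """Drop duplicate IDs, then route each record to its split."""
    seen = set()
    train, validation = [], []
    for record in records:
        if record["id"] in seen:
            continue  # a duplicate must not appear in both splits
        seen.add(record["id"])
        if assign_split(record["id"], validation_fraction) == "validation":
            validation.append(record)
        else:
            train.append(record)
    return train, validation


# Toy input: 1,000 unique IDs, 500 of them repeated.
records = [{"id": f"user-{i % 1000}"} for i in range(1500)]
train, validation = split_records(records)
```

Because the assignment depends only on the ID, adding new records later does not move existing ones between splits, which also helps with dataset versioning.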

Model Design and Selection

Design choices shape what AI can and cannot do. Start by selecting a model family suited to the task, such as linear models for simple relationships or neural networks for complex patterns. Consider architecture, depth, width, and connectivity, but balance these against compute constraints and latency requirements. Define an objective function that aligns with the problem, and choose an optimization method that reliably reaches good solutions. Modular, reusable components help teams experiment quickly, while clear abstractions aid collaboration with researchers and engineers. Remember that no single model fits every problem; iterative experimentation and careful evaluation guide the final choice.

Training, Validation, and Testing

Training is the phase where the model learns from data by adjusting parameters to minimize errors. Validation uses a separate dataset to monitor progress and detect overfitting. Testing then assesses performance on unseen data to estimate real-world behavior. Effective training requires appropriate loss functions, learning rates, and regularization strategies. Keep track of experiments with versioned configurations and reproducible pipelines. Avoid data leakage between training, validation, and test sets, which can produce overly optimistic results. In practice, limits on training duration, early stopping, and checkpointing help manage resources while preserving model quality.
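Early stopping and checkpointing can be sketched as a small loop that keeps the best parameters seen so far and stops once validation loss has not improved for a set number of epochs. The helper below is a hypothetical illustration: `step_fn`, `validate_fn`, and the `patience` default are assumed names, and the toy "model" is a single number whose validation loss starts rising after it passes 2.0.

```python
import copy


def train_with_early_stopping(step_fn, validate_fn, params,
                              max_epochs=100, patience=3):
    """Run training steps until validation loss stops improving."""
    best_loss = float("inf")
    best_params = copy.deepcopy(params)
    epochs_without_improvement = 0
    history = []
    for _ in range(max_epochs):
        params = step_fn(params)          # one epoch of training
        val_loss = validate_fn(params)    # loss on held-out data
        history.append(val_loss)
        if val_loss < best_loss:
            best_loss = val_loss
            best_params = copy.deepcopy(params)  # in-memory checkpoint
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss has stalled; stop early
    return best_params, best_loss, history


# Toy stand-ins: each "epoch" nudges w upward; validation loss is
# lowest at w = 2.0 and grows afterward, mimicking overfitting.
step_fn = lambda p: {"w": p["w"] + 0.5}
validate_fn = lambda p: (p["w"] - 2.0) ** 2

best_params, best_loss, history = train_with_early_stopping(
    step_fn, validate_fn, {"w": 0.0}
)
# best_params is {"w": 2.0}; training stops after 7 epochs.
```

In a real pipeline the checkpoint would usually be written to disk alongside the configuration that produced it, so the run can be reproduced.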

Evaluation Metrics and Benchmarks

Quantitative metrics measure how well an AI system meets its goals. Depending on the task, you might use accuracy, precision, recall, F1 score, BLEU, ROUGE, or mean squared error, among others. Always pair metrics with human evaluation to capture aspects that numbers miss, such as fairness, robustness, and interpretability. Benchmarks establish a baseline for comparison and track progress over time. When reporting results, describe the data distribution, evaluation protocol, and potential limitations to maintain transparency and trust. The best practice is to choose a small, stable set of metrics that align with real user impact.
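As a small illustration of how several of these metrics relate, the sketch below computes precision, recall, and F1 for a binary classifier directly from true and predicted labels. The function name and the toy labels are assumptions made for the example.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    # Guard against division by zero when a class is never predicted
    # or never present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return precision, recall, f1


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
# 3 true positives, 2 false positives, 1 false negative:
# precision = 0.6, recall = 0.75, F1 = 2/3
```

Here precision and recall differ noticeably, which a single accuracy figure (5 of 8 correct) would not reveal.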

Deployment and Monitoring

Bringing a model into production involves packaging, serving, and observing its behavior in real time. Decide on a serving architecture, latency targets, and monitoring dashboards that alert teams to data drift or performance degradation. Implement safeguards such as input validation, anomaly detection, and rate limiting to protect users. Version control of models and continuous integration pipelines support safe updates. Ongoing monitoring includes retesting with fresh data, auditing for bias, and documenting changes for governance. A well-designed deployment plan reduces risk and ensures the model remains aligned with its intended purpose.
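Drift monitoring can start very simply. The sketch below is one basic check among many: it compares the mean of a live window of a numeric feature against a reference sample, measured in standard errors. The function names, the threshold of 3.0, and the sample values are all illustrative assumptions; production systems typically use richer tests per feature.

```python
import statistics


def mean_shift_score(reference, live):
    """Distance between live and reference means, in standard errors."""
    ref_mean = statistics.fmean(reference)
    ref_std = statistics.stdev(reference)
    if ref_std == 0:
        # Constant reference: any change in the mean counts as a shift.
        return 0.0 if statistics.fmean(live) == ref_mean else float("inf")
    standard_error = ref_std / len(live) ** 0.5
    return abs(statistics.fmean(live) - ref_mean) / standard_error


def has_drifted(reference, live, threshold=3.0):
    """Flag drift when the live mean is far from the reference mean."""
    return mean_shift_score(reference, live) > threshold


# Reference values captured at training time (toy numbers).
reference = [10, 11, 9, 10, 12, 8, 10, 11, 9, 10]

stable_window = [10, 9, 11, 10, 10]    # same mean as the reference
shifted_window = [14, 15, 13, 16, 14]  # mean has moved to 14.4

stable_alert = has_drifted(reference, stable_window)    # False
shifted_alert = has_drifted(reference, shifted_window)  # True
```

A check like this only catches shifts in the mean; changes in spread or shape need other statistics, so treat it as a starting point for an alerting dashboard rather than a complete monitor.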

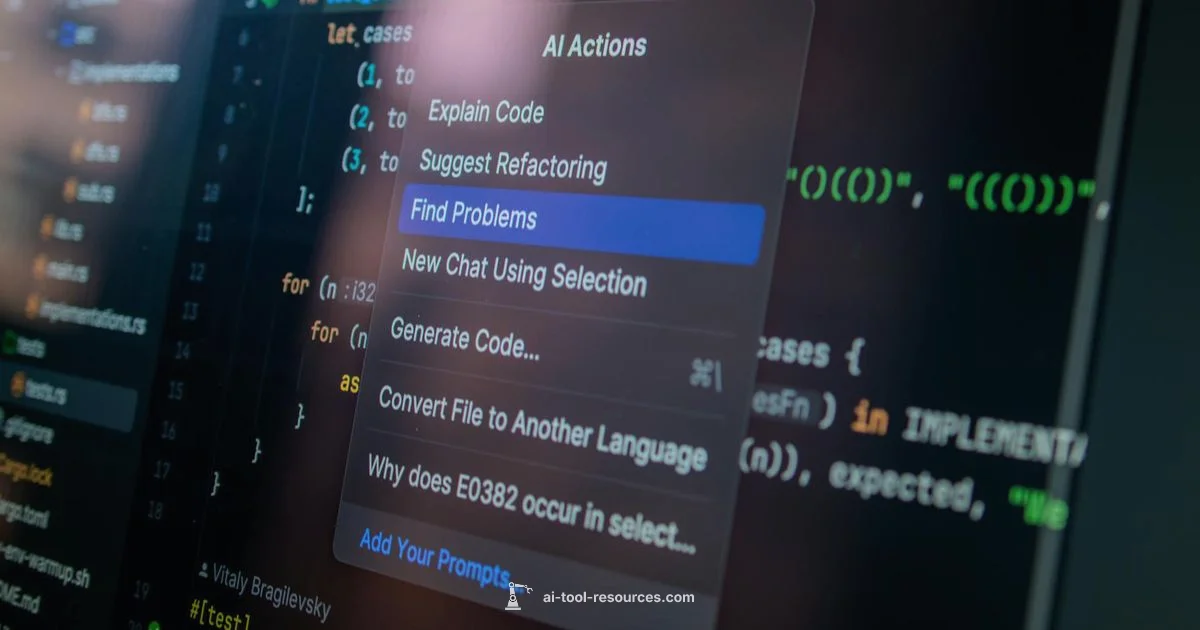

Tools and Frameworks You Might Use

Developers rely on an ecosystem of tools and frameworks that support data processing, model training, and deployment. Popular choices span data manipulation, machine learning libraries, experiment tracking, and deployment platforms. While this guide does not endorse specific products, be aware that common patterns include modular pipelines, hardware acceleration strategies, and scalable cloud or on-premises infrastructure. Invest time in learning version control, containerization, and reproducible environments to streamline collaboration and reduce friction across teams. The right toolset accelerates experimentation and improves reliability in production.

Safeguards, Ethics, and Governance

Ethical AI programming requires proactive attention to safety, fairness, privacy, and accountability. Build bias checks into data pipelines, employ diverse evaluation cohorts, and maintain transparent documentation of decisions. Establish governance policies that define who can approve model deployments and how models are audited over time. Consider user impact, potential harms, and modes of redress. Privacy by design, data minimization, and explainability help users trust AI systems. In short, responsible AI programming is as much about process and governance as it is about algorithms.
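A bias check can be as simple as reporting a metric per cohort instead of one aggregate number. The sketch below assumes each evaluation example carries a hypothetical "group" field alongside its label and prediction; the field names and toy data are illustrative, and accuracy is only one of several metrics worth slicing this way.

```python
from collections import defaultdict


def accuracy_by_group(examples):
    """Return accuracy computed separately for each cohort."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for ex in examples:
        total[ex["group"]] += 1
        correct[ex["group"]] += int(ex["label"] == ex["prediction"])
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two cohorts."""
    return max(per_group.values()) - min(per_group.values())


examples = [
    {"group": "a", "label": 1, "prediction": 1},
    {"group": "a", "label": 0, "prediction": 0},
    {"group": "a", "label": 1, "prediction": 1},
    {"group": "a", "label": 0, "prediction": 0},
    {"group": "b", "label": 1, "prediction": 0},
    {"group": "b", "label": 0, "prediction": 0},
    {"group": "b", "label": 1, "prediction": 1},
    {"group": "b", "label": 1, "prediction": 0},
]

per_group = accuracy_by_group(examples)  # {"a": 1.0, "b": 0.5}
gap = max_accuracy_gap(per_group)        # 0.5
```

Overall accuracy on this toy set is 0.75, which hides the fact that one cohort is served much worse than the other; a governance policy might require the gap to stay under an agreed limit before deployment is approved.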

Common Pitfalls and How to Avoid Them

Even experienced teams encounter challenges when programming AI. Common pitfalls include overfitting to training data, data leakage, and chasing novelty over reliability. Insufficient data diversity can produce biased models that fail in real-world contexts. Relying on a single metric can obscure important tradeoffs, while insufficient monitoring allows drift to go unnoticed. To mitigate these issues, adopt a diverse data strategy, keep the test set strictly separate from training data, validate across scenarios, and implement rigorous monitoring and governance throughout the lifecycle.

Practical Recipe: A Minimal End-to-End Pipeline

A practical end-to-end workflow starts with defining a clear objective and selecting a suitable data source. Prepare the data with cleaning, normalization, and labeling as needed. Split the data into training and testing sets and choose a simple, robust model. Train with a reasonable number of iterations, monitor performance, and save checkpoints. Deploy to a lightweight serving environment and set up monitoring to detect drift and predict failures. Iterate by retraining on fresh data, validating results, and repeating the cycle. This recipe emphasizes repeatability, testing, and safety at every stage.
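The recipe above can be sketched with nothing but the Python standard library. Everything here is an assumption chosen for brevity: the data is synthetic, the model is a one-feature nearest-centroid classifier, and "deployment" is reduced to saving the model as JSON and reloading it the way a serving process might. Monitoring and retraining are left out.

```python
import json
import os
import random
import tempfile


def clean(rows):
    """Drop rows with a missing feature or label."""
    return [r for r in rows if r["x"] is not None and r["label"] is not None]


def fit_normalizer(rows):
    """Learn min-max scaling parameters from the given rows."""
    values = [r["x"] for r in rows]
    lo, hi = min(values), max(values)
    return {"lo": lo, "scale": (hi - lo) or 1.0}


def normalize(x, norm):
    return (x - norm["lo"]) / norm["scale"]


def train_centroids(rows, norm):
    """Nearest-centroid model: mean normalized feature per label."""
    sums, counts = {}, {}
    for r in rows:
        key = str(r["label"])  # string keys survive a JSON round trip
        sums[key] = sums.get(key, 0.0) + normalize(r["x"], norm)
        counts[key] = counts.get(key, 0) + 1
    return {key: sums[key] / counts[key] for key in sums}


def predict(x, model):
    """Return the label whose centroid is closest to the input."""
    z = normalize(x, model["norm"])
    centroids = model["centroids"]
    return min(centroids, key=lambda k: abs(z - centroids[k]))


def accuracy(rows, model):
    hits = sum(predict(r["x"], model) == str(r["label"]) for r in rows)
    return hits / len(rows)


# 1. Collect: two well-separated synthetic classes plus two bad rows.
rng = random.Random(0)
rows = [{"x": rng.gauss(0.0, 1.0), "label": 0} for _ in range(100)]
rows += [{"x": rng.gauss(6.0, 1.0), "label": 1} for _ in range(100)]
rows += [{"x": None, "label": 1}, {"x": 2.0, "label": None}]

# 2. Prepare and split; the normalizer is fit on training rows only.
data = clean(rows)
rng.shuffle(data)
train_rows, test_rows = data[:160], data[160:]
norm = fit_normalizer(train_rows)

# 3. Train and evaluate on the held-out rows.
model = {"norm": norm, "centroids": train_centroids(train_rows, norm)}
test_accuracy = accuracy(test_rows, model)

# 4. Checkpoint, then reload as a serving process would.
path = os.path.join(tempfile.mkdtemp(), "model.json")
with open(path, "w") as f:
    json.dump(model, f)
with open(path) as f:
    served_model = json.load(f)

served_prediction = predict(5.5, served_model)
```

Fitting the normalizer on the training rows alone is deliberate: computing it over all rows would let test-set statistics influence training, which is a mild form of the leakage discussed earlier.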

The Road Ahead: Trends in AI Programming

The landscape of AI programming continues to evolve with more capable models, better data governance, and stronger emphasis on safety. Expect improvements in automation of data labeling, better tooling for reproducibility, and more transparent evaluation practices. As models become increasingly integrated into daily workflows, organizations will focus on governance, traceability, and ethical considerations to ensure AI benefits are shared broadly. The trend is toward approachable, dependable AI that adapts to diverse contexts while respecting user rights and societal norms.

FAQ

What is the first step in AI programming?

The first step is to frame the problem, define the objective, and gather representative data. This sets the direction for model choice and evaluation.

Start by defining the problem, setting objectives, and collecting representative data.

Why is data quality critical in AI programming?

Data quality directly affects model performance and fairness. Clean, diverse, and well-labeled data helps models learn accurately and generalize to real-world scenarios.

Data quality drives performance and fairness; better data means better AI.

What learning paradigms are commonly used in AI programming?

Common paradigms include supervised, unsupervised, and reinforcement learning. The choice depends on data availability, task type, and desired outcomes.

You typically choose supervised, unsupervised, or reinforcement learning based on the task.

How do you ensure AI systems remain safe after deployment?

Deployments include monitoring, validation, and governance. Implement drift detection, audits, and user feedback loops to maintain safety and reliability.

Monitor models, audit decisions, and respond to feedback to stay safe.

What ethical considerations should guide AI programming?

Ethical AI programming addresses bias, transparency, privacy, and accountability. Establish policies, document decisions, and involve diverse stakeholders.

Ethics matter; address bias, privacy, and accountability from the start.

What skills help someone learn AI programming?

Key skills include data handling, statistics, programming, and familiarity with ML workflows. Practice with small projects and study current best practices.

Learn data skills, math, programming, and ML workflows with hands-on practice.

Key Takeaways

- Define clear objectives before data collection

- Prioritize data quality and governance

- Select models and training methods aligned with task

- Monitor for drift and bias in production

- Emphasize ethics and transparency in AI programming