What Are AI Tools? A Practical Guide for Builders Today

Discover what AI tools are, how they work, and how developers, researchers, and students can assess, pilot, and apply them effectively.

AI tools are software applications that use artificial intelligence to perform tasks, automate processes, and generate insights.

What Are AI Tools and Why They Matter

If you are wondering what AI tools are, this guide gives a clear, practical answer. AI tools are software applications that apply machine learning, statistical models, and data‑driven reasoning to perform tasks that traditionally required human intelligence. They automate routine work, extract insights from complex data, generate content, and assist decision-making across many domains. They help developers write better code, researchers analyze large datasets, and students draft clearer essays, promising speed, scale, and new capabilities. According to AI Tool Resources, reliable AI tools balance capability with governance and explainability, enabling teams to ship features quickly while keeping risk under control. This article outlines what these tools are, how they work, and how to choose, pilot, and use them responsibly in your projects, with practical criteria, real‑world use cases, and a step-by-step approach to getting started with confidence.

Core Categories of AI Tools

AI tools cover a broad spectrum. Here's a practical map to help you navigate:

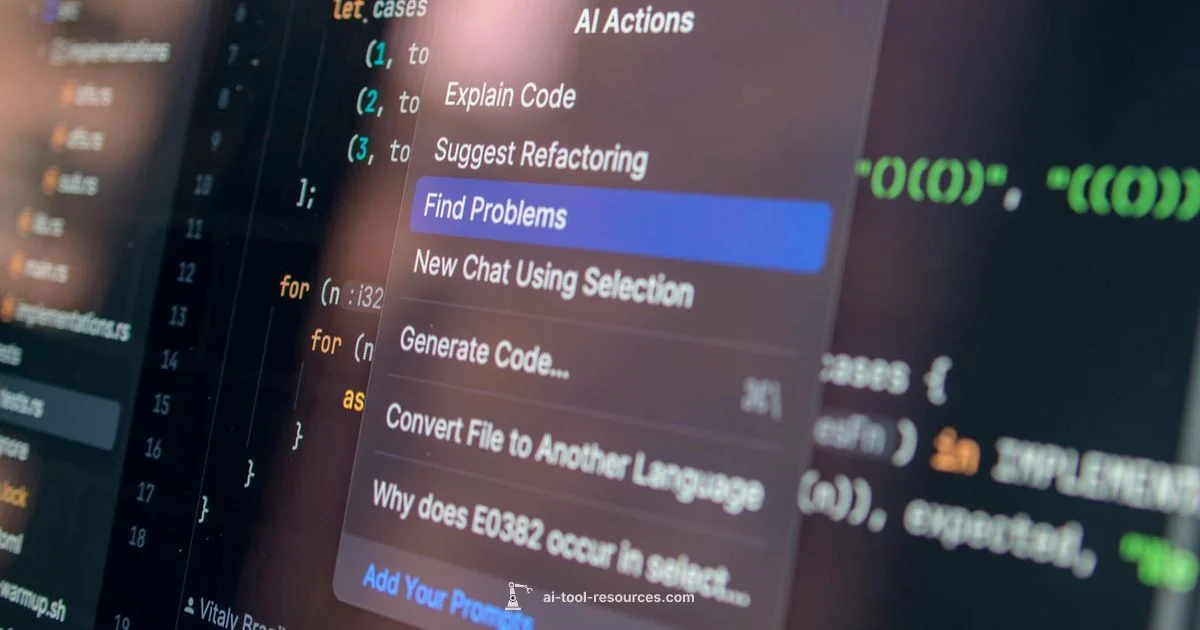

- Code and development assistants: Autocomplete, code suggestions, bug fixes, and refactoring ideas that speed up programming.

- Data analysis and automation: Tools that clean datasets, run models, visualize results, and automate repetitive tasks.

- Content creation and editing: Language models and media generators that draft text, summarize content, translate, or produce images and audio.

- Design and user experience: AI that prototypes interfaces, optimizes layouts, and generates assets such as icons or mockups.

- Conversational agents and virtual assistants: Chatbots for support, tutoring, or onboarding experiences.

- Workflow automation and integration: Platforms that connect apps, trigger actions, and orchestrate complex processes.

- Domain-specific AI solutions: Tailored tools built for engineering, biology, finance, education, and more.

Across these categories you will find a range of capabilities, from lightweight templates to enterprise‑grade platforms. The goal is to pick tools that align with your team’s technical maturity and governance needs.

How AI Tools Work Under the Hood

Most AI tools follow an input, processing, and output pattern. On the input side, a tool accepts prompts, data files, or requests from other systems through an API. The processing stage runs trained models, usually hosted in the cloud and accessed via APIs, to perform inference: classification, prediction, or generation. The output is either used directly or routed into downstream systems. Behind the scenes you will encounter machine learning models trained on large datasets, prompting techniques that guide responses, and safety filters that block or flag unsafe results. Key design considerations include latency, scalability, data retention, and how results are presented to users.

Important caveats: models can hallucinate (produce confident but incorrect output), drift as the data they see diverges from their training data, or reflect biases in that training data. To counter this, responsible AI tools expose explainability features, allow user control, and provide auditing capabilities. For engineers and researchers, understanding these tradeoffs is essential when building trustworthy tools.
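
The input, processing, and output stages can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular product works: `fake_model` is a hypothetical stand-in for a hosted model API so the example runs offline, and `BLOCKED_TERMS` is an illustrative keyword filter, far simpler than the safety systems real tools use.

```python
from dataclasses import dataclass
from typing import Callable

# Illustrative keyword filter (an assumption); real safety systems are far more sophisticated.
BLOCKED_TERMS = {"password", "ssn"}


@dataclass
class ToolResult:
    output: str
    blocked: bool


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a hosted model; a real tool would call a vendor API here."""
    return f"Summary: {prompt[:40]}"


def run_tool(user_input: str, model: Callable[[str], str]) -> ToolResult:
    # Input stage: normalize the raw prompt before it reaches the model.
    prompt = user_input.strip()
    if not prompt:
        raise ValueError("empty prompt")
    # Processing stage: run inference with the (stubbed) model.
    raw = model(prompt)
    # Safety filter: withhold any output that contains a blocked term.
    if any(term in raw.lower() for term in BLOCKED_TERMS):
        return ToolResult(output="[withheld by safety filter]", blocked=True)
    # Output stage: return the result for direct use or a downstream system.
    return ToolResult(output=raw, blocked=False)


if __name__ == "__main__":
    print(run_tool("  Quarterly sales rose in every region.  ", fake_model))
```

Passing the model in as a function keeps the pipeline testable: the stub can be swapped for a real API client without touching the filtering or output logic.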

Evaluating AI Tools: Criteria That Matter

Selecting an AI tool should start with clear goals and measurable criteria. Key factors to consider include:

- Accuracy and reliability: how often outputs are correct and consistent on your real‑world tasks and data.

- Latency and throughput: how quickly results arrive and how many requests the tool can handle per unit of time.

- Data handling and privacy: storage location, access control, retention, and deletion policies.

- Security and governance: authentication, authorization, and compliance with internal rules.

- Interoperability: compatibility with your existing data formats, pipelines, and APIs.

- Vendor support and roadmaps: quality of documentation, community, and product updates.

- Total cost of ownership: licensing, usage fees, and ongoing maintenance.

AI Tool Resources notes that practical pilots with clear metrics reduce risk and accelerate learning. Run small experiments, collect measurable outcomes, and adjust your selection based on real results.
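
One way to turn the criteria above into a comparable number is a weighted scoring matrix. The sketch below assumes each candidate is scored from 1 (poor) to 5 (strong) on every criterion during a pilot; the weights, tool names, and scores are hypothetical placeholders that a team would replace with its own priorities and measurements (Python 3.9+).

```python
# Hypothetical weights reflecting one team's priorities; they sum to 1.0.
WEIGHTS = {
    "accuracy": 0.25,
    "latency": 0.10,
    "privacy": 0.20,
    "security": 0.15,
    "interoperability": 0.10,
    "support": 0.05,
    "cost": 0.15,  # scored so that 5 means "most affordable"
}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine 1-5 criterion scores into a single weighted score."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weight * scores[name] for name, weight in weights.items())


# Hypothetical pilot scores for two candidate tools.
candidates = {
    "tool_a": {"accuracy": 4, "latency": 3, "privacy": 5, "security": 4,
               "interoperability": 4, "support": 3, "cost": 2},
    "tool_b": {"accuracy": 5, "latency": 4, "privacy": 2, "security": 3,
               "interoperability": 3, "support": 4, "cost": 4},
}

ranking = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
for name in ranking:
    print(f"{name}: {weighted_score(candidates[name]):.2f}")
```

In this made-up example the more accurate `tool_b` ranks below `tool_a` because privacy and security carry heavy weights; different weights can flip the ranking, which is why they are best agreed before the pilot starts.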

Real-World Use Cases for Developers, Researchers, and Students

Developers rely on AI tools to accelerate coding, improve testing, and automate builds. Researchers use them for data exploration, literature reviews, and simulations. Students employ AI for brainstorming, drafting assignments, tutoring, and language translation. The common thread is augmentation rather than replacement: tools should extend human judgment, not supplant it. For example, a coding assistant may suggest optimizations, a data tool can reveal patterns, and a writing assistant can help with structure and clarity. When applying these tools, prioritize reproducibility, clear data provenance, and transparent model behavior across inputs. AI Tool Resources emphasizes starting small, validating results, and iterating toward reliable workflows that you can scale with confidence.

Risks, Ethics, and Governance You Should Not Ignore

Power comes with responsibility. Key concerns include bias in outputs, privacy of inputs, and the risk of data leakage when handling sensitive information. Establish governance policies that specify who can approve tool use, what data may be processed, and how results are audited. Implement explainability features so users understand why an answer was produced, and keep humans in the loop for critical decisions. Regularly review tool performance, update risk assessments, and require secure data handling practices. Be transparent about tool limitations to foster trust in education, research, and professional settings. The AI Tool Resources approach treats responsible use as a foundation for sustainable progress, not a barrier to innovation.
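
A policy that specifies which data each approved tool may process can also be enforced in code. The following is a minimal sketch under stated assumptions: the tool names and data classifications in `POLICY` are hypothetical, the audit log is an in-memory list rather than durable storage, and anything not explicitly approved is denied by default.

```python
from datetime import datetime, timezone

# Hypothetical policy: data classifications each approved tool may receive.
POLICY: dict[str, set[str]] = {
    "code_assistant": {"public", "internal"},
    "writing_assistant": {"public"},
}

# In-memory audit trail; a real deployment would write to durable, tamper-evident storage.
audit_log: list[dict] = []


def is_allowed(tool: str, data_class: str, user: str) -> bool:
    """Check a request against POLICY and record the decision for later audits."""
    # Tools missing from POLICY get an empty set, so they are denied by default.
    allowed = data_class in POLICY.get(tool, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "tool": tool,
        "data_class": data_class,
        "allowed": allowed,
    })
    return allowed
```

For example, `is_allowed("writing_assistant", "confidential", "bob")` returns `False` and still leaves an audit entry, so reviewers see attempted misuse as well as normal activity.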

How to Pilot an AI Tool Safely and Measure Value

A safe pilot begins with a well‑defined problem and concrete success criteria: before you start, state the problem you are solving and how you will measure success. Then gather representative data, define privacy requirements, and establish data handling rules. Design validation experiments with clear baselines and, where feasible, control groups. Monitor metrics such as accuracy, latency, user satisfaction, and downstream impact. Iterate by adjusting prompts, settings, and integrations, documenting lessons learned as you go. Finally, assess total cost of ownership and long‑term maintenance, and make sure you have the resources to scale the tool or sunset it if needed. The AI Tool Resources approach of planning, governance, and incremental learning helps you maximize value while reducing risk.
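
Comparing a pilot against a baseline is easier when the success criteria are written down as code before results come in. The sketch below assumes a labeled evaluation set, uses the nearest-rank method for the 95th-percentile latency, and treats the thresholds in `CRITERIA` as hypothetical values a team would set for itself.

```python
import math


def p95(values: list[float]) -> float:
    """95th percentile using the nearest-rank method."""
    if not values:
        raise ValueError("no latency samples")
    ordered = sorted(values)
    rank = math.ceil(0.95 * len(ordered))
    return ordered[rank - 1]


def evaluate(predictions: list[str], labels: list[str], latencies_ms: list[float]) -> dict[str, float]:
    """Accuracy against labels plus p95 latency for one system on the evaluation set."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and the same length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return {"accuracy": correct / len(labels), "p95_latency_ms": p95(latencies_ms)}


# Hypothetical success criteria agreed before the pilot started.
CRITERIA = {"min_accuracy_gain": 0.05, "max_p95_latency_ms": 800.0}


def pilot_passes(baseline: dict[str, float], pilot: dict[str, float],
                 criteria: dict[str, float] = CRITERIA) -> bool:
    """True only if the pilot beats the baseline by enough AND stays within the latency budget."""
    gain = pilot["accuracy"] - baseline["accuracy"]
    return gain >= criteria["min_accuracy_gain"] and pilot["p95_latency_ms"] <= criteria["max_p95_latency_ms"]


if __name__ == "__main__":
    labels = ["bug", "ok", "ok", "bug"]
    baseline = evaluate(["bug", "bug", "ok", "bug"], labels, [100, 120, 110, 130])
    pilot = evaluate(["bug", "ok", "ok", "bug"], labels, [200, 300, 250, 900])
    print(baseline, pilot, pilot_passes(baseline, pilot))
```

In this toy run the pilot is more accurate than the baseline but misses the latency budget, so it fails; an explicit threshold surfaces that tradeoff instead of leaving it to impressions. With only four samples the p95 equals the slowest request, so a real pilot needs far more data.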

A Practical 30-Day Roadmap to Adoption

- Days 1 to 5: Inventory tasks that could benefit from AI and identify candidate tools.

- Days 6 to 10: Sign up for trials, set up test projects, and establish governance basics.

- Days 11 to 15: Run small pilots, collect feedback, and compare results across tools.

- Days 16 to 20: Select top candidates, configure integrations, and implement security controls.

- Days 21 to 25: Scale pilots to broader teams, document best practices, and refine governance guidelines.

- Days 26 to 30: Review outcomes, finalize procurement, and plan training and change management.

Throughout, maintain a living log of experiments and decisions so you can justify choices with data. This roadmap emphasizes disciplined experimentation and continuous learning.
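
The living log of experiments and decisions can be as simple as one JSON object per line (the JSON Lines convention), which is easy to append to, diff, and review. This sketch assumes a hypothetical `ExperimentEntry` schema; the field names and example values are illustrative, not a standard format.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ExperimentEntry:
    day: int          # day of the 30-day roadmap
    tool: str
    hypothesis: str
    metric: str
    result: float
    decision: str     # e.g. "continue", "drop", or "scale"


def to_jsonl(entries: list[ExperimentEntry]) -> str:
    """Serialize entries as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(asdict(e)) + "\n" for e in entries)


def from_jsonl(text: str) -> list[ExperimentEntry]:
    """Parse JSON Lines text back into entries, skipping blank lines."""
    return [ExperimentEntry(**json.loads(line)) for line in text.splitlines() if line.strip()]


if __name__ == "__main__":
    log = [
        ExperimentEntry(12, "tool_a", "Cuts triage time", "minutes_per_ticket", 6.5, "continue"),
        ExperimentEntry(18, "tool_b", "Improves test coverage", "coverage_pct", 71.0, "drop"),
    ]
    print(to_jsonl(log), end="")
```

Writing the output of `to_jsonl([entry])` to a file opened in append mode preserves the full history, so a later procurement decision can point back to the entries that justified it.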

Common Pitfalls and How to Avoid Them

Even well-intentioned teams can trip up when adopting AI tools. Common pitfalls include overestimating capabilities, chasing novelty over durable value, and neglecting data governance. Other risks are vendor lock‑in, insufficient integration planning, and unclear ownership of model outputs. To avoid these traps, start with a narrow scope, set defensible evaluation criteria, and document the rationale behind each decision. Regular reviews and stakeholder alignment help keep projects on track and prevent scope creep.

FAQ

What counts as an AI tool in 2026?

In 2026, an AI tool is any software that uses machine learning, natural language processing, computer vision, or related AI methods to automate tasks, analyze data, or generate outputs. It can be a lightweight script that calls a model or a sophisticated platform that integrates with cloud services. The category spans coding assistants, data analytics tools, content generators, and domain-specific solutions.

How do I choose between AI tools for coding vs data analysis?

Start with your objective and a quick pilot. Compare accuracy, latency, and how well each tool integrates with your stack. Pick the one that meets your criteria while offering governance and safety features.

Are AI tools secure for handling sensitive data?

Security depends on the provider and your configuration. Choose tools with strong data handling policies, encryption, access controls, and explicit privacy terms. Never assume safety; validate with your security team and run a risk assessment.

Do AI tools replace human workers?

AI tools are designed to augment human work, not replace it. They handle repetitive aspects and provide insights, while humans provide judgment, ethics, and context. Effective adoption pairs machines with skilled professionals.

What is the typical cost of AI tools?

Pricing for AI tools varies widely based on features, scale, and support. Many vendors offer free trials and tiered subscriptions, with higher tiers unlocking advanced capabilities. Plan for total cost of ownership including data handling and maintenance.
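
Total cost of ownership is simple arithmetic once the pieces are named. The sketch below assumes a per-seat license, usage billed per 1,000 requests, and maintenance measured in staff hours; every figure in the example is a made-up placeholder, not real vendor pricing.

```python
def total_cost_of_ownership(
    months: int,
    seats: int,
    license_per_seat_month: float,
    requests_per_month: int,
    price_per_1k_requests: float,
    maintenance_hours_per_month: float,
    hourly_rate: float,
    one_time_setup: float = 0.0,
) -> dict[str, float]:
    """Break TCO into setup, licensing, usage, and maintenance for a given period."""
    licensing = months * seats * license_per_seat_month
    usage = months * (requests_per_month / 1000) * price_per_1k_requests
    maintenance = months * maintenance_hours_per_month * hourly_rate
    total = one_time_setup + licensing + usage + maintenance
    return {"setup": one_time_setup, "licensing": licensing, "usage": usage,
            "maintenance": maintenance, "total": total}


if __name__ == "__main__":
    # Placeholder figures for a hypothetical 12-month estimate.
    costs = total_cost_of_ownership(
        months=12, seats=10, license_per_seat_month=20.0,
        requests_per_month=50_000, price_per_1k_requests=2.0,
        maintenance_hours_per_month=5, hourly_rate=80.0, one_time_setup=1_000.0,
    )
    print(costs)
```

With these placeholder numbers, maintenance (4,800) is twice the licensing cost (2,400), which illustrates why staff time belongs in the estimate alongside subscription fees.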

Who should govern AI tool usage in organizations?

Governance should be cross‑functional, involving security, legal, data science, product, and leadership. Establish policies for data handling, access, auditing, and accountability. Regular reviews keep practices aligned with risk and objectives.

Key Takeaways

- Define clear goals before selecting tools.

- Map AI tools to your workflow categories.

- Pilot with measurable success criteria.

- Assess data privacy and governance upfront.

- Iterate and scale based on real results.